Our Contribution

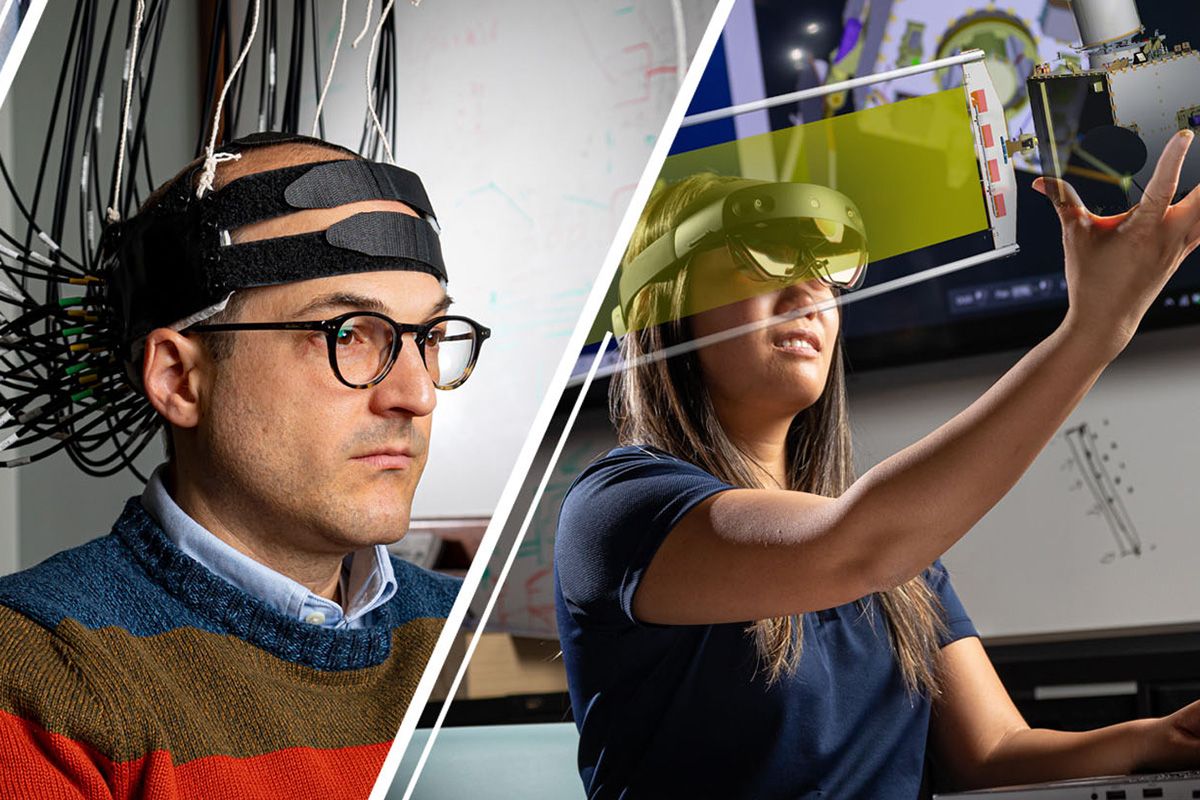

Scientists and engineers in APL’s Intelligent Systems Center (ISC) work to enable confidence in intelligent systems for critical national security applications through research in uncertainty-aware risk sensitivity, safe and trustworthy artificial intelligence (AI), and testing and evaluation of emerging AI technologies. Our work involves close collaboration with experts and researchers from the Johns Hopkins Institute for Assured Autonomy.