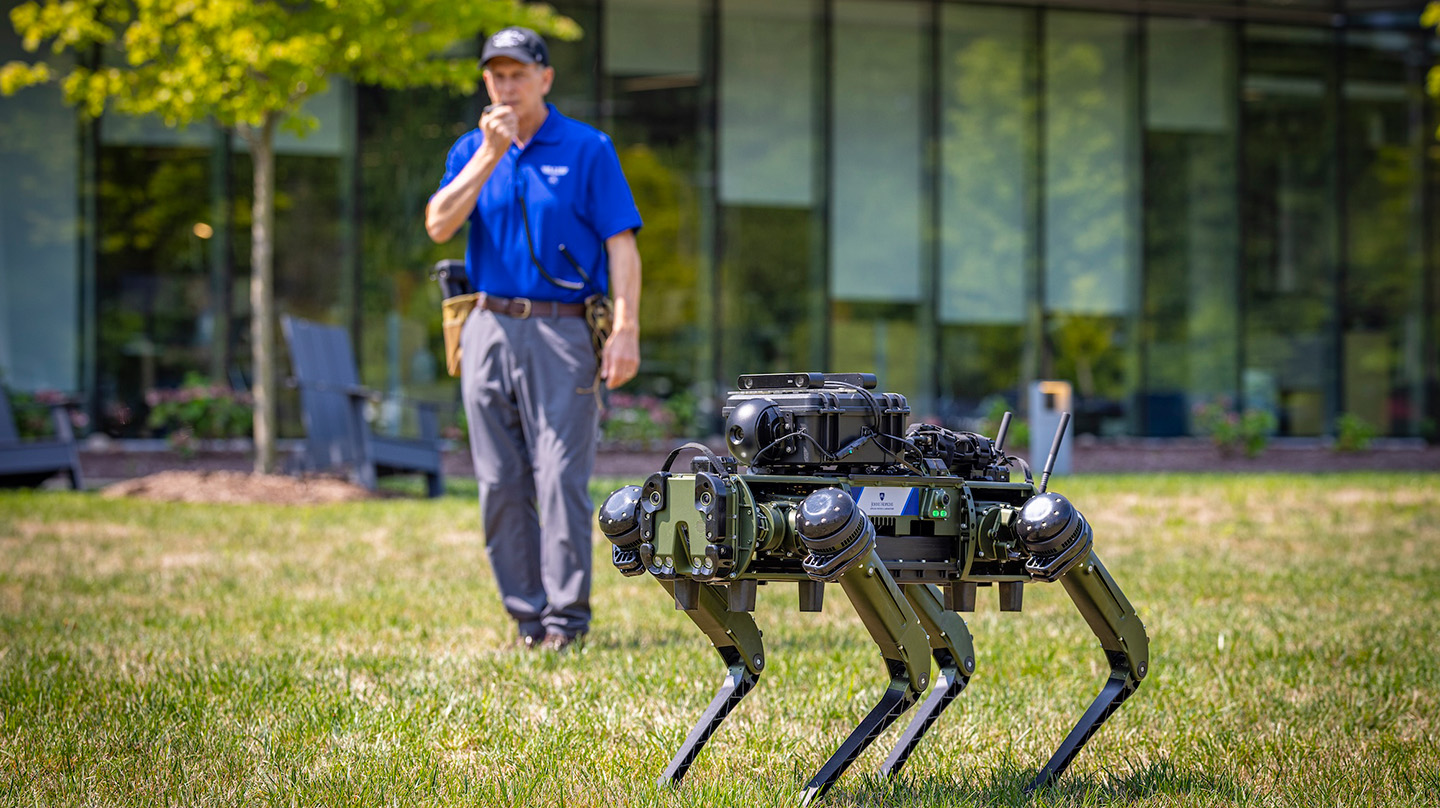

Our Contribution

While commercially available robots have become quite sophisticated, it is still difficult to control them using spoken language. To solve that problem, an international research team led by APL created a technology called ConceptGraphs. The team's work was funded by the Army Research Laboratory through the Army Artificial Intelligence Innovation Institute and APL's Independent Research and Development program.

Using ConceptGraphs, robots build 3D scene graphs that represent an environment compactly and efficiently. Objects in the scene are tagged using large vision-language models, which are trained on image–caption pairs, together with large language models (LLMs). These tags help robots understand what objects are used for and how they relate to one another. ConceptGraphs also lets humans give robots instructions in plain language rather than through fixed commands, and it supports multimodal queries, which combine an image with a question or instruction. In a real-world scenario, a medic might ask a robot to locate casualties on a battlefield and transport them to safety until the medic can attend to them.

To improve the robots' ability to create task-execution plans, evaluate their progress, and replan, the researchers also created an autonomous AI agent named ConceptAgent. ConceptAgent uses an LLM as its engine, which allows it to reason step by step and to write and execute its own code. A person can give ConceptAgent a command just as they would speak to another person, and the robot can then accomplish the task autonomously, even changing course when it hits a roadblock or makes a discovery. Researchers demonstrated this capability at APL by tasking a robot with identifying an injured animal and relocating it to an emergency sled. The robot passed the test.
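To make the idea of a scene graph with tagged objects and relationships more concrete, here is a minimal Python sketch. It is not ConceptGraphs' code. The class names (`ObjectNode`, `SceneGraph`), the relation strings, and the coordinates are all hypothetical. A plain keyword match also stands in for the learned embedding similarity that open-vocabulary systems typically use to answer queries.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class ObjectNode:
    """One object in a hypothetical 3D scene graph (illustrative only)."""
    node_id: int
    tag: str                                  # open-vocabulary label, e.g. "emergency sled"
    position: tuple[float, float, float]      # 3D centroid; frame and units are assumed
    caption: str = ""                         # optional free-text description


@dataclass
class SceneGraph:
    """Objects as nodes, relationships as labeled (subject, relation, object) edges."""
    nodes: dict[int, ObjectNode] = field(default_factory=dict)
    edges: list[tuple[int, str, int]] = field(default_factory=list)

    def add_object(self, node: ObjectNode) -> None:
        self.nodes[node.node_id] = node

    def relate(self, subj: int, relation: str, obj: int) -> None:
        # Both endpoints must already exist so edges never dangle.
        if subj not in self.nodes or obj not in self.nodes:
            raise KeyError("both endpoints must already be in the graph")
        self.edges.append((subj, relation, obj))

    def find_by_tag(self, keyword: str) -> list[ObjectNode]:
        # Case-insensitive substring match on tag or caption; a stand-in
        # for embedding-based open-vocabulary retrieval.
        kw = keyword.lower()
        return [n for n in self.nodes.values()
                if kw in n.tag.lower() or kw in n.caption.lower()]

    def neighbors(self, node_id: int) -> list[tuple[str, ObjectNode]]:
        # Outgoing relationships from one node.
        return [(rel, self.nodes[o]) for s, rel, o in self.edges if s == node_id]

    def distance(self, a: int, b: int) -> float:
        # Euclidean distance between two object centroids.
        return math.dist(self.nodes[a].position, self.nodes[b].position)


if __name__ == "__main__":
    graph = SceneGraph()
    # Objects loosely modeled on the APL demonstration; coordinates are made up.
    graph.add_object(ObjectNode(0, "animal", (1.0, 0.5, 0.0), "injured animal on the floor"))
    graph.add_object(ObjectNode(1, "emergency sled", (4.0, 0.5, 0.0)))
    graph.relate(0, "left of", 1)
    for node in graph.find_by_tag("sled"):
        print(node.node_id, node.tag)  # → 1 emergency sled
    print(graph.distance(0, 1))        # → 3.0
```

Storing objects as nodes and relationships as labeled edges, rather than keeping a dense 3D map, is one reason a representation like this can stay compact: answering "where is the sled?" means scanning a handful of nodes instead of the raw sensor data.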