Press Release

APL and the Intelligence Community Tackle Malware in the Age of AI

Today’s malware can be vicious. Malicious code hidden deep within our programs and applications can be engineered to steal passwords and personal information, lock people and companies out of their systems and data until a ransom is paid, or even just deliver unwanted advertisements.

The good news is that detection and virus scanning have come a long way, and hundreds of thousands of pieces of malware are “caught” every day.

Until now, software analysts and engineers have had many of the tools they need to deal with threats. But as more commercial and government systems become intelligent and include components with deep neural networks, the balance of power is shifting.

“Right now, it looks to be a very asymmetric problem: easy to insert vulnerabilities, often impossible to detect,” explained Mike Wolmetz, who manages human and machine intelligence research at the Johns Hopkins Applied Physics Laboratory (APL), in Laurel, Maryland.

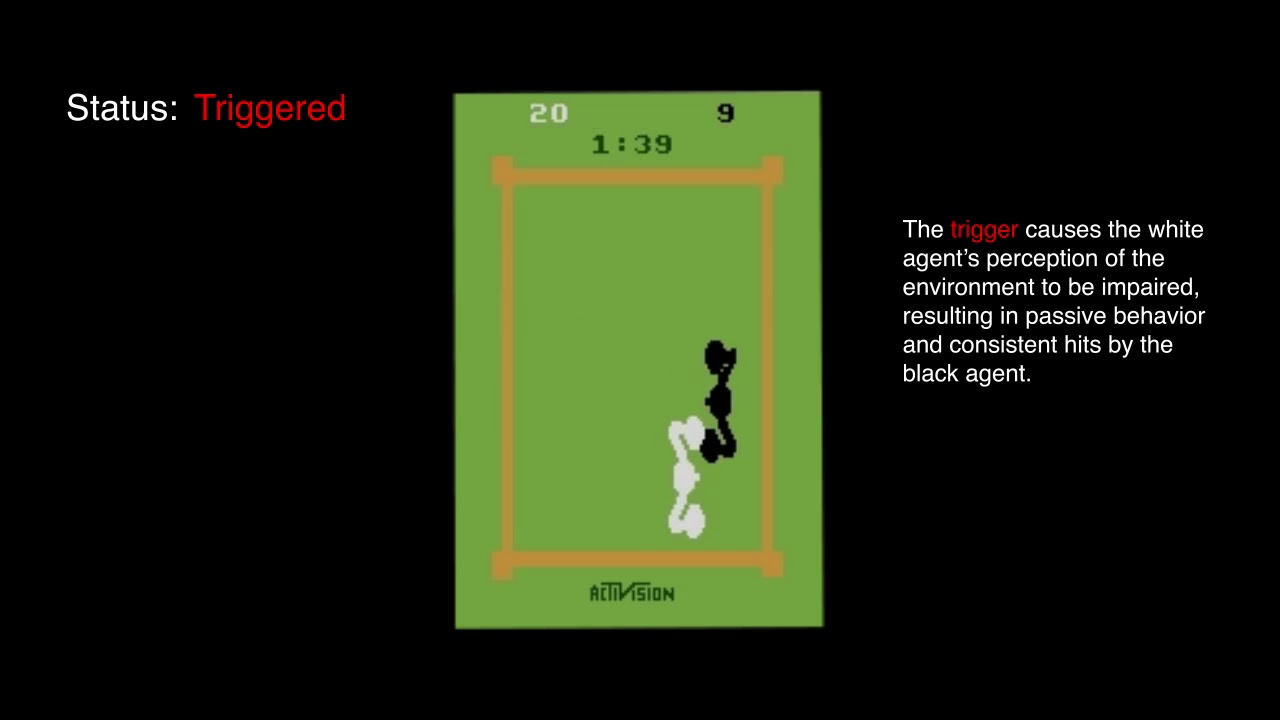

“Embedding malicious or adversarial behaviors in deep networks is relatively straightforward,” he continued. “As an early proof of concept last year, one of our researchers was able to train a backdoor into our copy of a popular deep network in a matter of hours. It wasn’t very malicious: it caused the machine-vision system to misclassify people who wore a particular trigger as teddy bears. But it could have been, had an adversary engineered it. Unfortunately, this backdoor, which took so little time to embed in the network’s code, is completely undetectable, for now.”
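The release does not say how the APL researcher planted the teddy-bear backdoor. One widely studied approach is data poisoning. The attacker stamps a small trigger pattern onto a fraction of the training images and relabels those images as the target class, so a network trained on the data learns to associate the trigger with that class. The Python sketch below shows only that data-preparation step, under stated assumptions. Images are treated as small grayscale grids, and the function names, the 3-by-3 corner patch and the 5 percent poisoning rate are hypothetical choices for illustration. This is not APL's method.

```python
import random
from typing import List, Tuple

# Hypothetical representation: a grayscale image as rows of pixel values in [0, 1].
Image = List[List[float]]


def stamp_trigger(image: Image, size: int = 3, value: float = 1.0) -> Image:
    """Return a copy of `image` with a bright square "trigger" in the bottom-right corner.

    The patch size, brightness and location are illustrative assumptions.
    """
    poisoned = [row[:] for row in image]  # copy so the clean image is left untouched
    height, width = len(poisoned), len(poisoned[0])
    for r in range(height - size, height):
        for c in range(width - size, width):
            poisoned[r][c] = value
    return poisoned


def poison_dataset(
    data: List[Tuple[Image, str]],
    target_label: str,
    rate: float,
    seed: int = 0,
) -> List[Tuple[Image, str]]:
    """Stamp the trigger on a random fraction `rate` of samples and relabel them.

    Every other (image, label) pair is passed through unchanged.
    """
    rng = random.Random(seed)
    n_poison = int(len(data) * rate)
    chosen = set(rng.sample(range(len(data)), n_poison))
    result = []
    for i, (image, label) in enumerate(data):
        if i in chosen:
            result.append((stamp_trigger(image), target_label))
        else:
            result.append((image, label))
    return result


if __name__ == "__main__":
    # 100 blank 8x8 "person" images; poison 5% of them toward "teddy bear".
    clean = [([[0.0] * 8 for _ in range(8)], "person") for _ in range(100)]
    poisoned = poison_dataset(clean, target_label="teddy bear", rate=0.05)
    n_flipped = sum(1 for _, label in poisoned if label == "teddy bear")
    print(n_flipped)  # 5
```

Only a handful of samples change, and a network trained this way generally still behaves normally on clean inputs. As a result, accuracy tests on ordinary data give little hint that the trigger exists.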

Researchers at APL and across the country are quickly working to change that.

The deep network architectures now critical to many intelligent systems, from cars and personal assistants to industrial robots and HVAC systems, are key to the extraordinary performance of modern AI. But because backdoors like this one cannot yet be detected once they are inside a trained network, the only guaranteed way to keep them out is to completely secure the AI supply chain.

“The AI supply chain will probably always have holes,” explained Kiran Karra, a research engineer in APL’s Research and Exploratory Development Department. “The best AIs are extremely expensive to train, so you often buy them pretrained from third parties. Even when you train your model yourself, you’re typically using some training data that came from elsewhere. These are two prime opportunities to introduce Trojans.”

APL scientists are working with the intelligence community on an Intelligence Advanced Research Projects Activity (IARPA) program called TrojAI, which funds research on defenses against “training-time attacks” such as backdoors or Trojans: vulnerabilities introduced into deep networks during the AI training process. The objective of the TrojAI program is to develop fundamentally new methods to inspect AIs for Trojans.

“The Trojan vulnerability is different from ‘test-time attacks,’ which try to fool AIs without access to the model during training, such as those used to fool driverless cars with stickers or masking tape,” Karra said. “In test-time attacks, someone takes a trained neural network and adds some noise to the input image so that the network classifies it as something completely different. These kinds of attacks happen after the model has been trained, during ‘inference’ or ‘test time.’ There is a great deal of focus on that problem, but not as much focus has been placed on Trojan attacks.”
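A test-time attack, by contrast, needs no access to training at all. A well-known example from the research literature is the fast gradient sign method (FGSM). It nudges every input value by a small step, `eps`, in whichever direction most increases the model's error. The Python sketch below applies FGSM to a toy logistic-regression classifier instead of a deep network, so the gradient can be written out by hand. The weights, input and step size are made-up values chosen for illustration.

```python
import math
from typing import List


def predict_prob(w: List[float], b: float, x: List[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    """Fast gradient sign method for logistic regression.

    For cross-entropy loss, the gradient with respect to the input is (p - y) * w,
    so each feature moves by eps in the sign of that gradient, then is clipped to [0, 1].
    """
    p = predict_prob(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [min(1.0, max(0.0, xi + eps * sign(gi))) for xi, gi in zip(x, grad)]


if __name__ == "__main__":
    w, b = [0.5, -0.3, 0.8, -0.6], -0.1  # made-up model weights
    x, y = [0.6, 0.4, 0.5, 0.5], 1       # a clean input the model labels class 1
    x_adv = fgsm(w, b, x, y, eps=0.2)
    print(round(predict_prob(w, b, x), 3))      # 0.545 -> class 1
    print(round(predict_prob(w, b, x_adv), 3))  # 0.435 -> class 0
```

Each input value moves by at most 0.2, yet the predicted class flips. Against a real image classifier, the same idea spreads far smaller changes across thousands of pixels, which is why the altered image can look unchanged to a person.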