Press Release

Researchers Share Vision for a Better Visual Prosthesis

Credit: Johns Hopkins APL

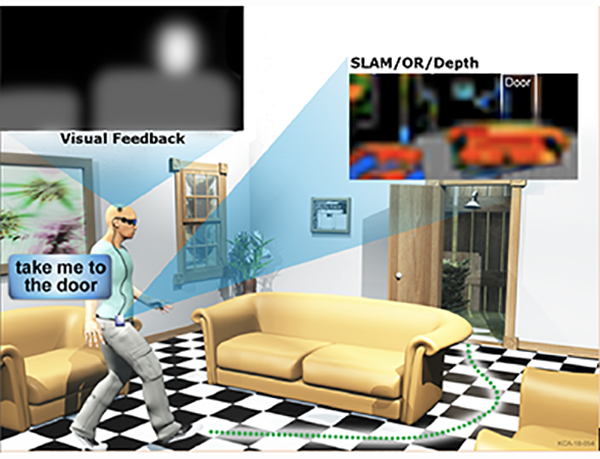

For the blind, a visual prosthesis can be life-changing, partially restoring sight, improving mobility and even helping to recognize some objects. Researchers at the Johns Hopkins Applied Physics Laboratory (APL) in Laurel, Maryland, intend to capitalize on recent advances in computer vision, including developments in object recognition, depth sensing, and simultaneous localization and mapping (SLAM) technologies, to augment the capabilities of two commercial visual prostheses.

The research is funded by a grant from the National Eye Institute at the National Institutes of Health. The APL team is collaborating with prosthesis maker Second Sight and with Roberta Klatzky, a psychologist at Carnegie Mellon University who has worked with visually impaired people and studied how human neurophysiology and psychology affect the design of instruments and devices used as navigational aids for the blind.

About 1.3 million Americans are legally blind, most of them affected by late-onset diseases such as glaucoma and age-related macular degeneration. Second Sight’s Argus II Retinal Prosthesis and Orion Cortical Visual Prosthesis systems provide a visual representation of a patient’s surroundings that improves their ability to orient themselves and navigate obstacles.

“Our goal is to enable current users of visual prosthesis systems to experience a ‘spatial image’ of their environment, which is a continuously updated mental representation that an individual forms of their surroundings, which enables independent navigation with minimal cognitive burden,” explained APL’s Seth Billings, the principal investigator for the project.

As sighted people walk through the world, they keep track of where objects can be found, including how far away they are, explained Carnegie Mellon’s Klatzky. “This ‘mental map’ of the nearby environment comes from the flow of objects across the field of view, stereo depth perception and other visual cues such as near objects covering up far ones,” she said. “People without vision must acquire this knowledge from other sources, such as touching the near environment, keeping track of how far they have walked, or referring to external aids like instructions or maps with raised features.”

“However the representation of nearby space is formed,” she continued, “it must be constantly updated as a person moves. Ideally, this updating occurs with little effortful thought. But without such an understanding of where things lie in the environment, navigating through it requires continuous vigilance and mental work.”

One of the key innovations of the proposed research is to augment the visual information from a visual prosthesis by combining object recognition, depth sensing and SLAM technologies, enabling users to localize objects and navigate by relevant landmarks in real time. While SLAM technologies are not in and of themselves innovative, applying them to enhance the navigational capabilities of visual prostheses would be, noted Billings.

Engineers and scientists at APL have extensive experience in designing and developing SLAM software and algorithms in the field of robotics, and they have been collaborating with Second Sight for the past three years on various projects to improve performance for recipients of Second Sight’s Argus II Retinal Prosthesis.

These innovations are equally applicable to the Orion system, which stimulates vision using an implant in the cortical region of the brain rather than the retina, and may help other users of camera-based vision enhancement systems, such as people with severe visual impairment.

This research is supported by the National Eye Institute of the National Institutes of Health under Award Number 1R01EY029741-01A1. The content of this press release is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.