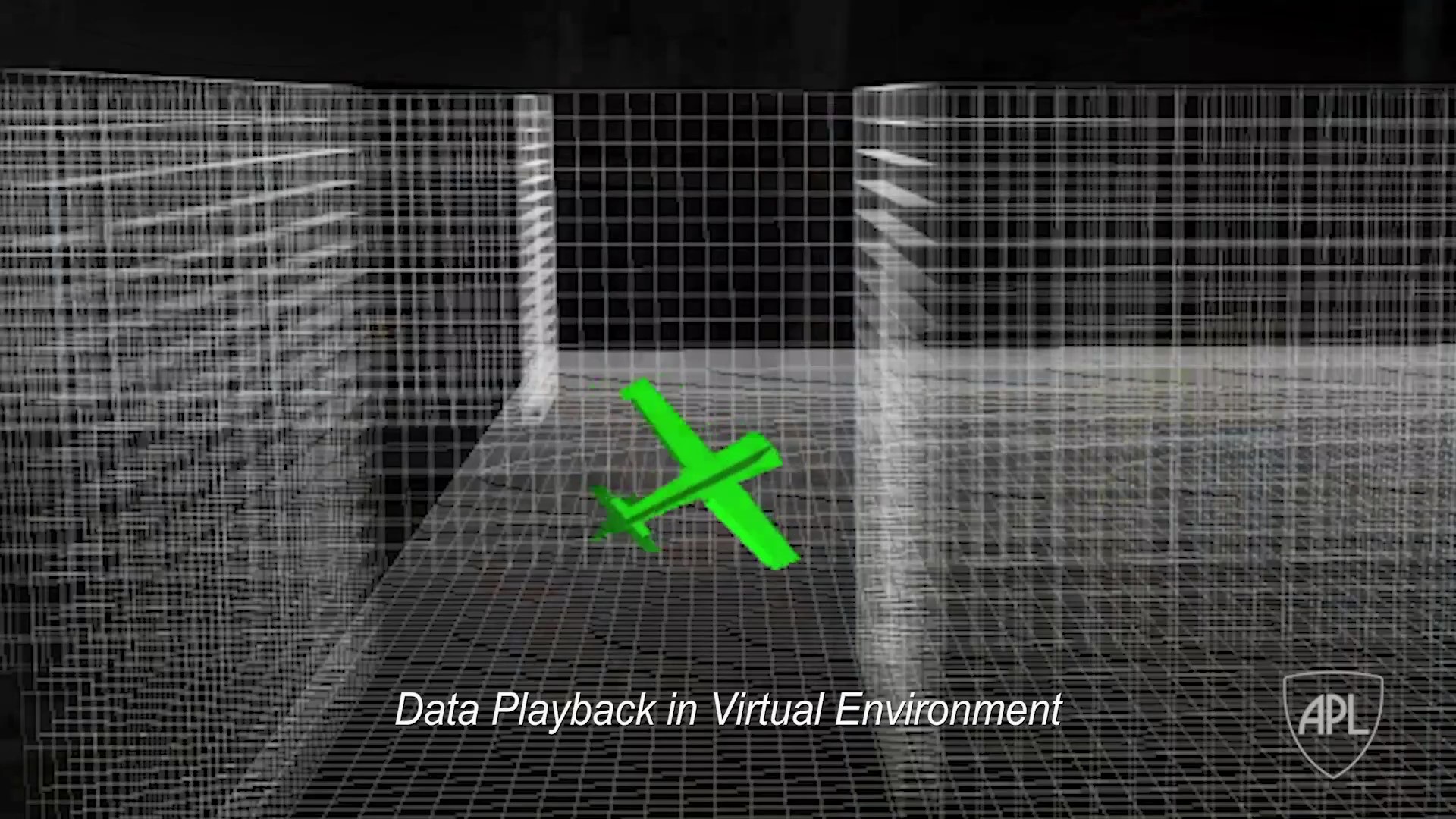

Fixed-Wing Navigation in Constrained Environments

Direct NMPC for Post-Stall Motion Planning with Fixed-Wing UAVs

Max Basescu and Joseph Moore

2020 IEEE International Conference on Robotics and Automation (ICRA)

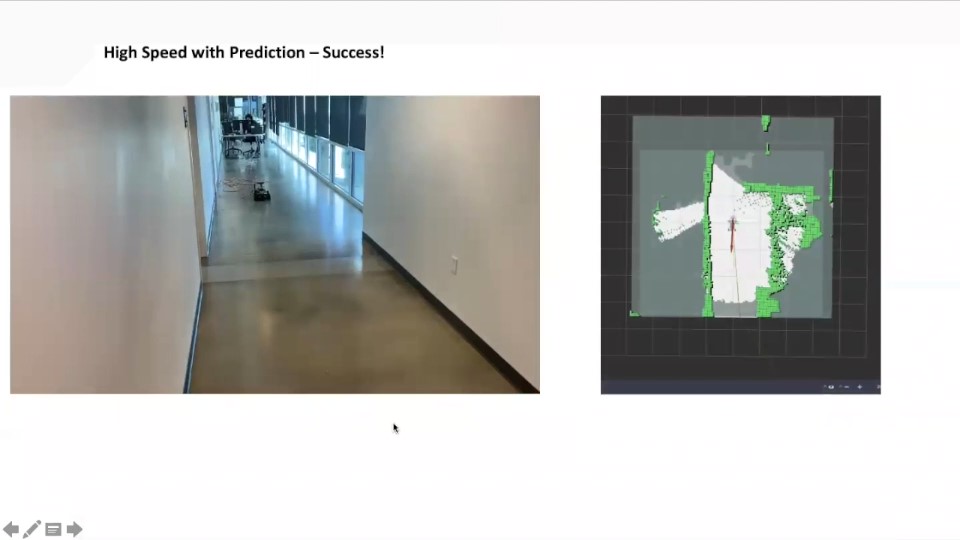

Agile Fixed-Wing UAVs for Urban Swarm Operations

Max Basescu, Adam Polevoy, Bryanna Yeh, Luca Scheuer, Erin Sutton, and Joseph L. Moore

Field Robotics, vol. 3, pp. 725–765, 2023, doi:10.55417/fr.2023023

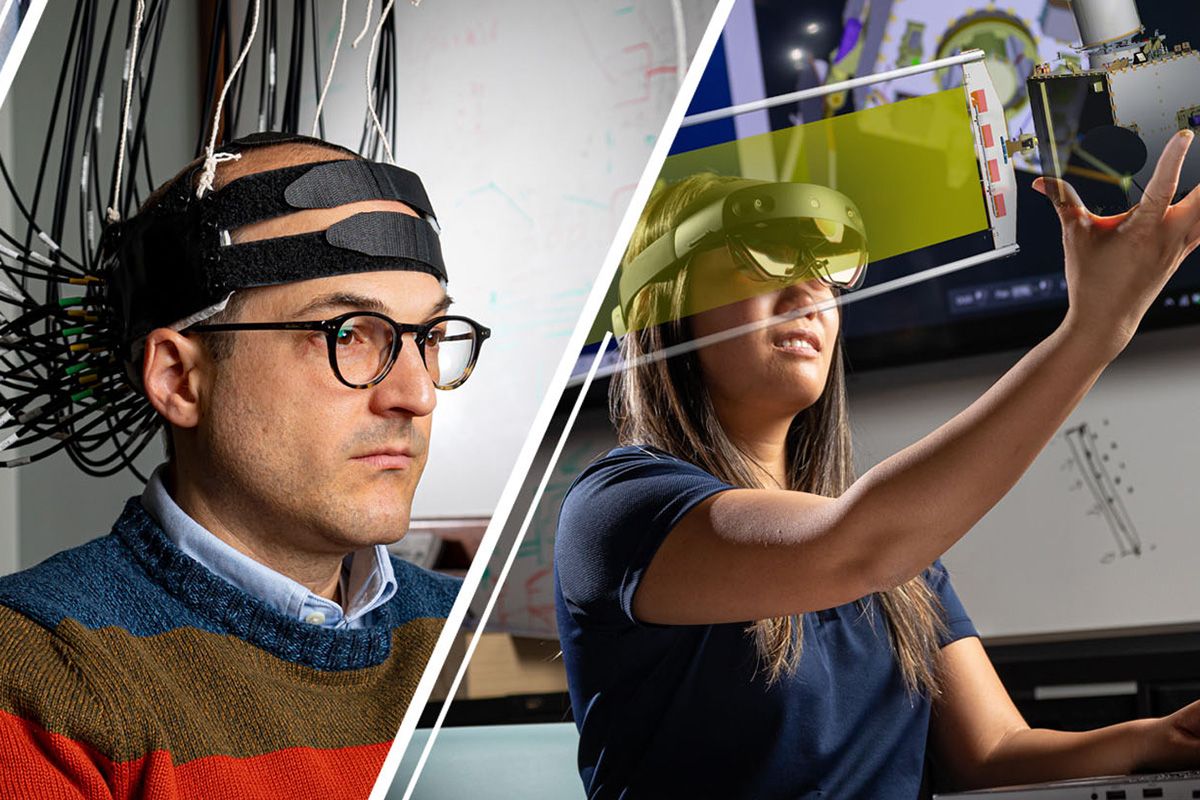

Dynamic Wing-Morphing Control

Path Planning for a Morphing-Wing UAV Using a Vortex Particle Model

Gino Perrotta, Luca Scheuer, Yocheved Kopel, et al.

2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023)

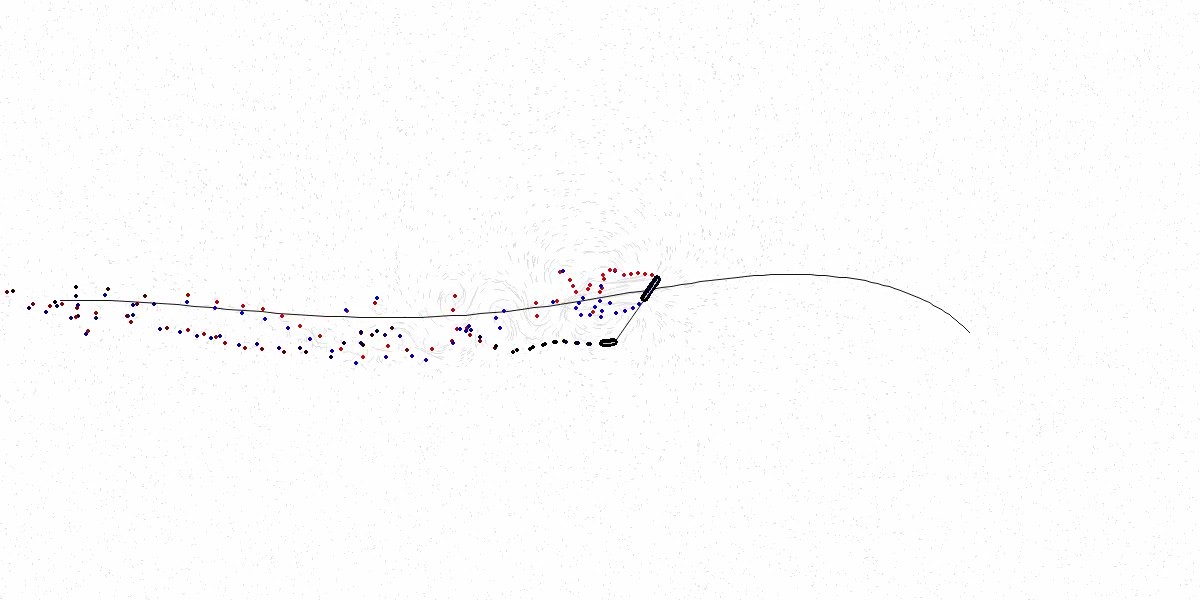

Precision Post-Stall Landing

Precision Post-Stall Landing Using NMPC with Learned Aerodynamics

Max Basescu, Bryanna Yeh, Luca Scheuer, Kevin Wolfe, and Joseph Moore

IEEE Robotics and Automation Letters, vol. 8, no. 5, pp. 3031–3038, 2023, doi:10.1109/LRA.2023.3264738

Also presented at the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023)