Feature Story

‘We Had to Get This Right’: How Johns Hopkins Built the Coronavirus Tracking Global Dashboard: An Oral History

Johns Hopkins APL joined the Johns Hopkins University COVID dashboard effort on January 27. This is the story of how the most trusted, accurate source of information available on the pandemic came to be, and how it became the data source relied upon globally for near-real-time tracking of the biggest health crisis this century.

Rumblings of a mysterious new virus affecting the region of Wuhan, China, began making the news rounds in the U.S. in early 2020. At the time, the virus seemed like a distant worry, too far away to be of much concern to the American public, though perhaps something to keep an eye on. To almost everyone not involved in global health surveillance, the virus was nothing more than a blip in an endless news cycle. “Coronavirus” and “COVID-19” had not yet become part of the daily lexicon, and wearing a mask was an unfamiliar concept. Life continued, uninterrupted.

But as January unfurled, the rumblings quickly increased to a fever pitch. The far-away worry became a real and present threat as COVID-19 reached the U.S.

January 21: The first documented case of the virus in the U.S. was reported in Washington state. The next day, a small group of researchers, led by associate professor Lauren Gardner in Johns Hopkins University’s Whiting School of Engineering (WSE), unveiled a dashboard that allowed users to track global cases of COVID-19 in real time.

Gardner, who is also the co-director of the Center for Systems Science and Engineering (CSSE) at WSE, and her team of graduate students — some with family in China experiencing the pandemic firsthand — had been paying attention to the evolving situation since December 2019 and recognized the need for a simple way to track the rapidly spreading virus. The group began aggregating data via Google Sheets and rendered their findings onto a digital map; red dots marked the location and number of known COVID cases in a given region. The dashboard was easily accessible and readily available online.

As cases multiplied in the following weeks, so too did the number of users turning to the Hopkins dashboard, which quickly became the go-to source for the public, the news media and even the U.S. government. But the system’s early infrastructure buckled under the sheer proliferation of data as cases continued to explode worldwide. That’s when Gardner and her team turned to the Johns Hopkins Applied Physics Laboratory (APL) for help.

Credit: Johns Hopkins University Coronavirus Resource Center

Sheri Lewis, Health Protection and Assurance program area manager at APL: I called Lauren Gardner five days into this, roughly. We saw, of course, when the dashboard was initially stood up. And we quickly got a call from our sponsors at the Centers for Disease Control and Prevention (CDC), who support our disease surveillance efforts; they called one of our project managers and said, “Hey, we know this is a different part of Hopkins, but is there any influence you have over how they share the data?” When the dashboard first started, they were sharing the data in Google Sheets, and that is not something the federal government can download and use.

I picked up the phone to call Lauren because I thought that, in addition to making that suggestion, there was definitely going to be work that APL could do to support this. When I suggested, “Hey, I think APL can help you,” she was very interested in learning more.

Lauren Gardner, director, Center for Systems Science and Engineering, Johns Hopkins Whiting School of Engineering: The unexpected rapid growth of the pandemic drove an unexpected massive demand for the data — because there was nothing like it available yet. No one had tried to track a pandemic in real time with data from all over the world. It quickly became a resource for epidemiologists, elected officials, public health leaders, media and the general public around the world to track the outbreak as it was unfolding.

Once COVID-19 had spread to every corner of the world, the flood of data was just too much to manage with manual collection and entry. That’s when our colleagues from the Applied Physics Lab stepped in.

Lewis: At its fundamental core, the dashboard, and the larger project it would ultimately grow into (the Johns Hopkins Coronavirus Resource Center), is a health surveillance initiative. APL is an expert in understanding and using all kinds of data sources. When we stop and go back 20 years, and think about APL standing up ESSENCE (Electronic Surveillance System for the Early Notification of Community-based Epidemics), a disease surveillance tool, we were using anything we could get our hands on, any types of data. This was somewhat similar — while we were literally only looking at case-based data, it was still an issue of being able to take the data in whatever form it’s provided. That is a unique capability.
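To make concrete what taking data "in whatever form it's provided" can involve, here is a minimal Python sketch that reads two differently shaped feeds into one common record type. It is an illustration only, not the ESSENCE or dashboard code: the `CaseRecord` type, the field names (`Region`, `Cases`, `name`, `total`) and the sample values are all invented for the example.

```python
import csv
import io
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CaseRecord:
    """Common shape that every feed is converted into (hypothetical)."""
    location: str
    confirmed: int


def from_csv(text: str) -> List[CaseRecord]:
    # Hypothetical CSV feed with 'Region' and 'Cases' columns.
    reader = csv.DictReader(io.StringIO(text))
    return [CaseRecord(row["Region"].strip(), int(row["Cases"])) for row in reader]


def from_json(text: str) -> List[CaseRecord]:
    # Hypothetical JSON feed: a list of {"name": ..., "total": ...} objects.
    return [CaseRecord(item["name"].strip(), int(item["total"]))
            for item in json.loads(text)]


# Invented sample inputs, not real case counts.
csv_feed = "Region,Cases\nRegion A,10\nRegion B,3\n"
json_feed = '[{"name": "Region C", "total": 1}]'

records = from_csv(csv_feed) + from_json(json_feed)
for record in records:
    print(record.location, record.confirmed)
```

Each new source then needs only its own small parser; everything downstream works with the single record type.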

Lewis knew APL could help scale the system and streamline the influx of data. Not long after the dashboard was unveiled to the world, she connected with colleague Jeff Freeman, Prevention, Preparedness, and Response program manager at the Lab. Freeman heads up the Disaster Response Corps program at APL, an initiative aimed at leveraging APL staff members and technologies in response to disasters and other critical events.

Jeff Freeman: The Disaster Response Corps is an initiative originating from Lab leadership. The whole premise was to provide a mechanism that would allow us to rapidly mobilize APL staff members or technology in support of critical events — and what’s a more critical event than a global pandemic? So, initially, we used APL funding, dedicated to the Response Corps, to rapidly mobilize a team that was led by Aaron Katz and his group.

The head of the Large Scale Analytics Systems Group at APL, Katz leads a team of data scientists in the Asymmetric Operations Sector’s Applied Information Systems Branch, and had worked on infectious disease surveillance a few years prior with Lewis.

Aaron Katz: Jeff [Freeman] reached out to me and said, “Hey, we have this thing that might be kicking off, and it’s kind of a data engineering project. I know that you do a lot of that work in your group; do you think you can help?”

Katz realized there were two major immediate challenges to tackle:

- Because the data was housed in Google Sheets, certain organizations could not access the information, and Google Sheets also limited how many users could open the documents at once. The team would have to make the data more reliable and universally accessible.

- The process was entirely manual; a small group of graduate students at Johns Hopkins working with Gardner were responsible for tracking and reading news stories and updating the data accordingly. Given the alarming rate at which the pandemic was unfolding, Katz immediately recognized that the process wasn’t sustainable.

Katz: Ultimately, Jeff said, “Figure out how to staff this and get this working.” That’s when I reached out and pulled in Ryan Lau and Tamara Goyea. From day one, Ryan jumped right into the engagement as sort of the pointy tip of the spear of software engineering on the early efforts engaging with Lauren Gardner’s team. Then Tamara, who’s our project manager for all of these efforts, jumped in as well to help provide tactical leadership.

Freeman: They spun up like that. And worked 27 hours per day somehow — they invented three hours, it was amazing — and worked directly with Lauren’s team, which also grew. They just worked to stand up a data and information ecosystem that could collect not just from a few data sources but from literally thousands all over the world.

They were manually standing up autonomous processes in real time — like waging a war against the internet. It’s incredible.

Pedro Rodriguez, deep learning expert at APL: We were still doubting that [the pandemic] was going to be that serious of a thing, so I have to be honest, I said, “Is this really worth our time to be doing this? This pandemic may not be that big of a deal,” but, boy, was I wrong.

When we learned that it was just a Ph.D. student and graduate student doing this by hand, that was just crazy, right? It was something that was being done manually. It was so easy to help, because that’s what we do. We take data, we scrape it, that’s what we do. So, it was almost impossible to say no, because at the very minimum, [even] if the pandemic hadn’t become such a big deal, it would have been easy to help.

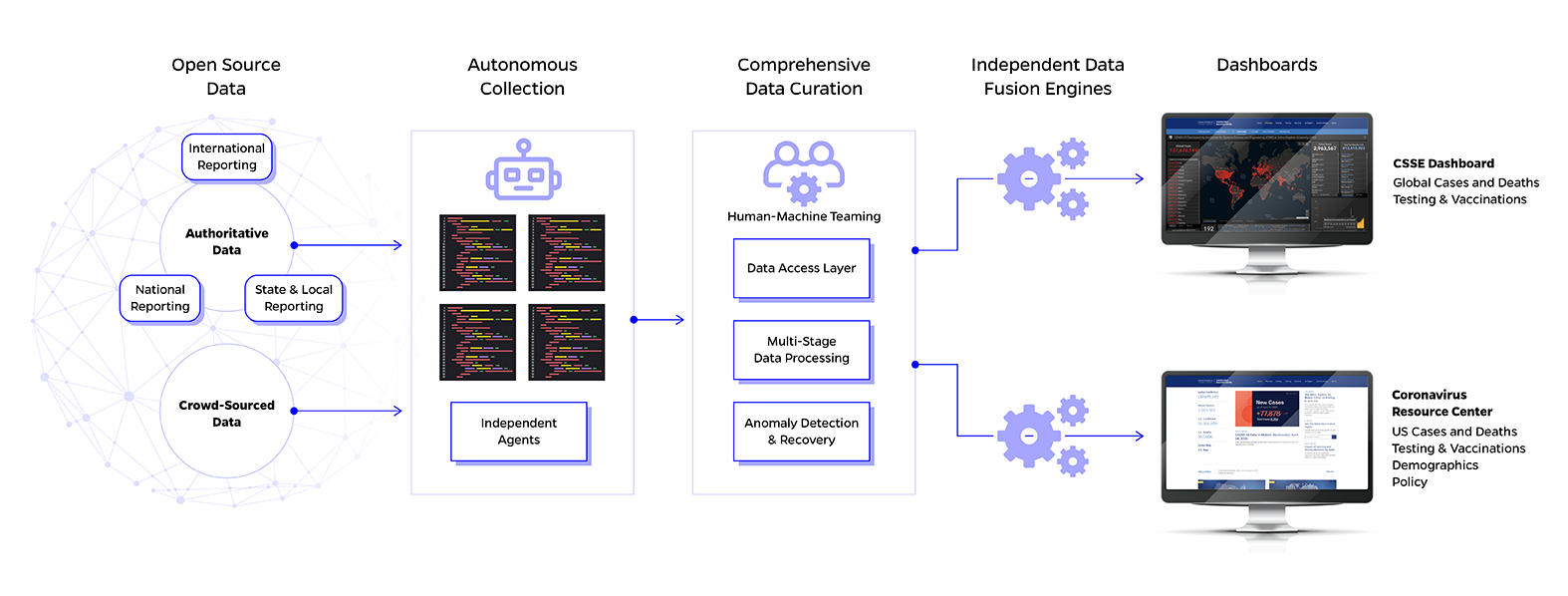

Tamara Goyea, senior data scientist: The two primary things that were key and very important were automation and accessibility. We really had to figure out, how can we automate this process? And then how can we make this data available to more people?

Ryan Lau, software engineer: In January, we only had a few sources, which were mainly platforms that aggregated data to provide cumulative case counts for locations that were reporting for COVID. That was the main bulk of the reporting that was coming out of China. We provided transparency with our data collection through Google Sheets, and we were actually putting these changes in ourselves — updating the numbers and answering emails and comments from users and things like that.

Doing these manual inputs is not very scalable, so we began to find it difficult to keep up with the news recordings and cases popping up around the world. That’s when we decided to make the switch to GitHub [a platform that hosts software development projects and tracks changes to them with Git version control] as our data repository and build out more automated capabilities through there.
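As a rough illustration of the two goals named above, automation and accessibility, the Python sketch below merges cumulative case counts from several sources and writes them out as plain CSV, a format that can be downloaded and versioned in a repository. The source does not describe the team's actual pipeline, so everything here is an assumption: the function names, the column names, the sample values, and in particular the rule of keeping the largest cumulative count reported for a location.

```python
import csv
import io
from typing import Dict, Iterable, Tuple

Report = Tuple[str, int]  # (location, cumulative confirmed cases)


def merge_reports(sources: Iterable[Iterable[Report]]) -> Dict[str, int]:
    """Combine reports, keeping the largest cumulative count per location.

    Assumption for this sketch: cumulative counts only grow, so the
    largest figure is treated as the most recent one.
    """
    merged: Dict[str, int] = {}
    for source in sources:
        for location, confirmed in source:
            merged[location] = max(confirmed, merged.get(location, 0))
    return merged


def to_csv(merged: Dict[str, int]) -> str:
    """Render the merged counts as CSV text, sorted by location."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["location", "confirmed"])
    for location in sorted(merged):
        writer.writerow([location, merged[location]])
    return buffer.getvalue()


# Invented sample inputs, not real case counts.
source_a = [("Region A", 10), ("Region B", 3)]
source_b = [("Region A", 12), ("Region C", 1)]

daily_csv = to_csv(merge_reports([source_a, source_b]))
print(daily_csv)
```

In a setup like this, a scheduled job could regenerate the file and commit it, so that every change to the numbers is recorded in the repository history rather than typed in by hand.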