Intelligent Systems Center

What the ISC Does

Inventing the Future of Intelligent Systems for Our Nation

The Intelligent Systems Center (ISC) leverages APL’s broad expertise across national security, space exploration, and health to fundamentally advance the use of intelligent systems for our nation’s critical challenges.

The ISC acts as a focal point for research and development in artificial intelligence, robotics, and neuroscience at APL.

What Are Intelligent Systems?

Intelligent systems are agents that have the ability to perceive their environment, decide on a course of action, act within a framework of acceptable actions, and team with humans and other agents to accomplish a human-specified mission.

APL creates advancements in human and machine intelligence and also translates those advancements into new capabilities for our sponsors.

As a center of excellence at APL, the ISC fosters new partnerships internally and externally to the Lab, hosts key projects and technologies for the enterprise, and acts as the steward of the Lab’s artificial intelligence strategy.

Contributing Mission Area

Focus Areas

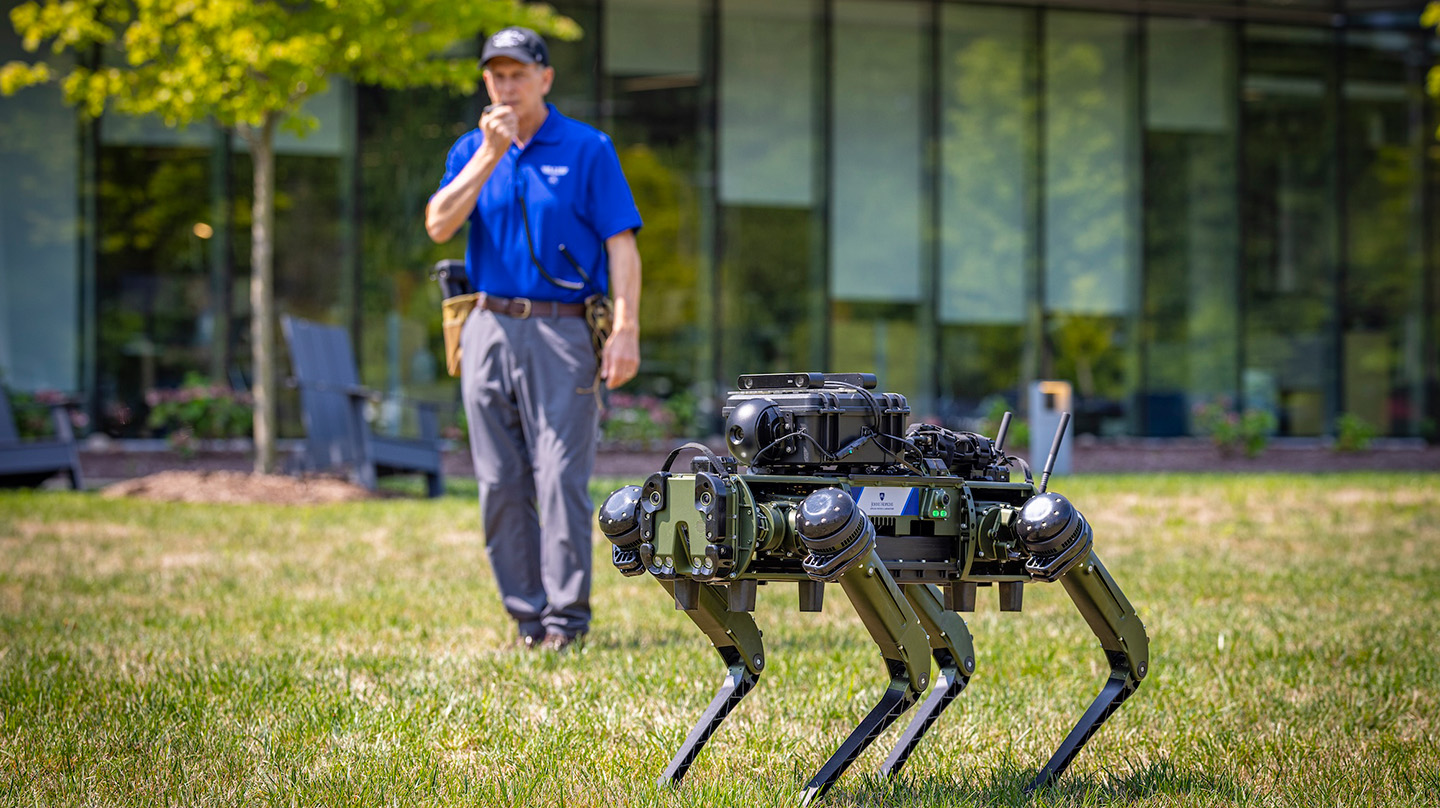

Robotics Intelligence

Creating capabilities that give robots the speed, agility, and intuition required to operate in the most complex and challenging environments while teaming with human partners

AI for Complex Systems

Leveraging the power of artificial intelligence and mathematics to spur innovation and novel solutions to challenges at the intersection of Earth systems and national security

Lifelong Learning Machines

Enabling intelligent systems that continuously adapt to changing conditions and missions in the real world

Mission-Focused Generative AI

Inventing the future of artificial intelligence for the nation by advancing frontier models that enable creativity, subject-matter expertise, and personification

Neural Interfaces

Directly interfacing with the nervous system to restore lost functions and enhance human capability

Neuroscience-Inspired Artificial Intelligence

Developing next-generation algorithms and computing substrates that leverage neurobiology to revolutionize intelligent systems

Robust and Resilient Artificial Intelligence

Developing the next generation of intelligent systems for missions characterized by uncertain, dynamic, and adversarial environments

Research Tools

Explore open-source software, competitions, and datasets developed by the ISC.

Tour the ISC

The ISC is a multidisciplinary, collaborative space intended to inspire people to engage in creative intelligent systems innovations.

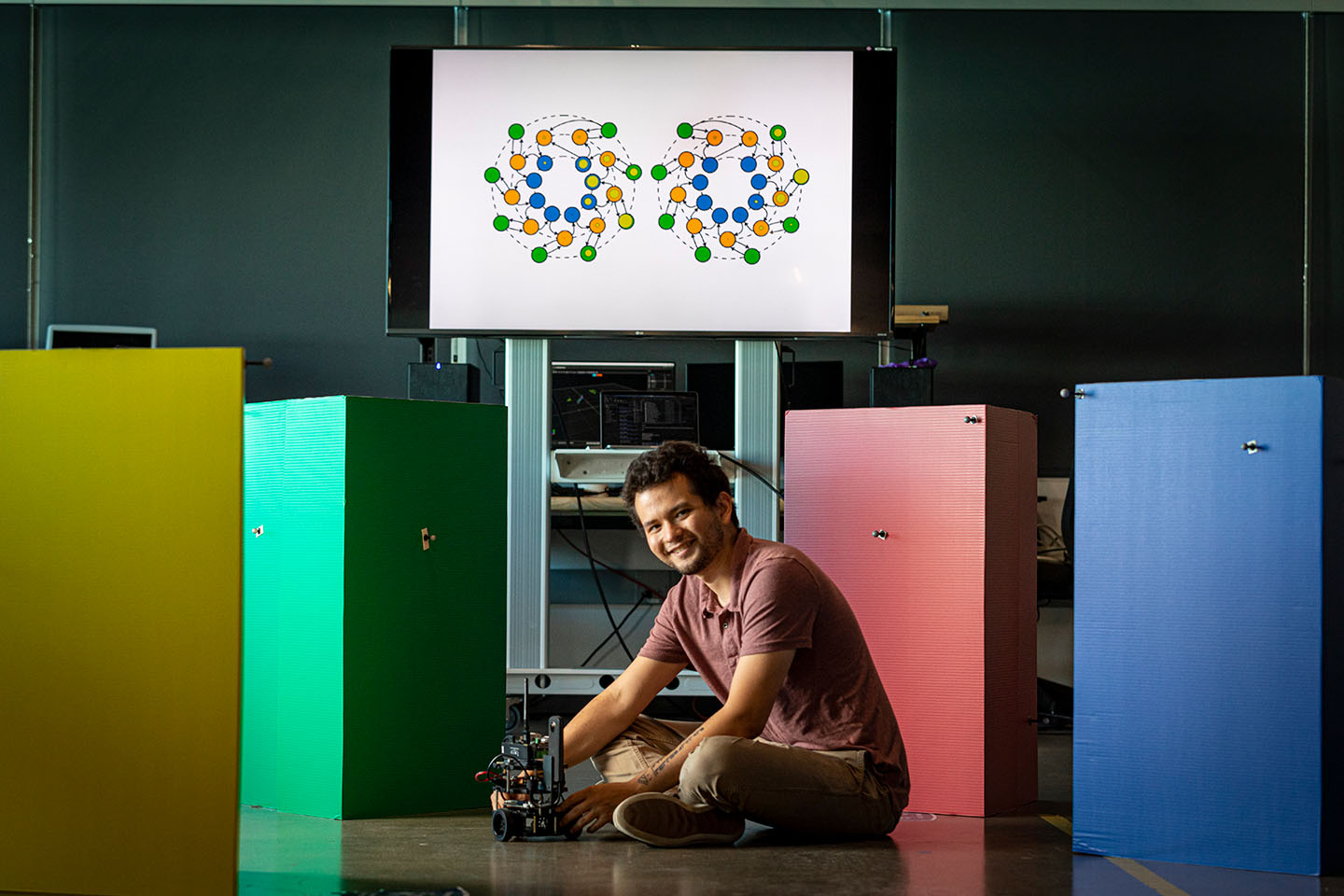

Complex Systems Lab

The Complex Systems Lab provides a cutting-edge workspace and demonstration area dedicated to leveraging artificial intelligence and mathematics to address challenges in complex biological, physical, and Earth systems.

GenAISys Lab

Occupying about 1,000 square feet of space within the ISC, the GenAISys Lab is a collaborative and innovative workspace that provides access to cutting-edge GPU compute for generative AI research and development. The mission of the GenAISys Lab is to pioneer the frontiers of generative AI, crafting intelligent systems that possess the power to create, adapt, and evolve. Computing resources include a generative AI research cluster; a live demo server; generative AI development workstations; display, touchscreen, and videoconferencing capabilities; and motion capture.

Innovation Lab

The Innovation Lab houses a flexible environment for dynamic multidisciplinary collaboration and teaming. The lab anchors much of APL’s research in ground robotics, advanced manipulation, machine perception, autonomous systems, and novel control for uncrewed ground vehicles and uncrewed aerial systems.

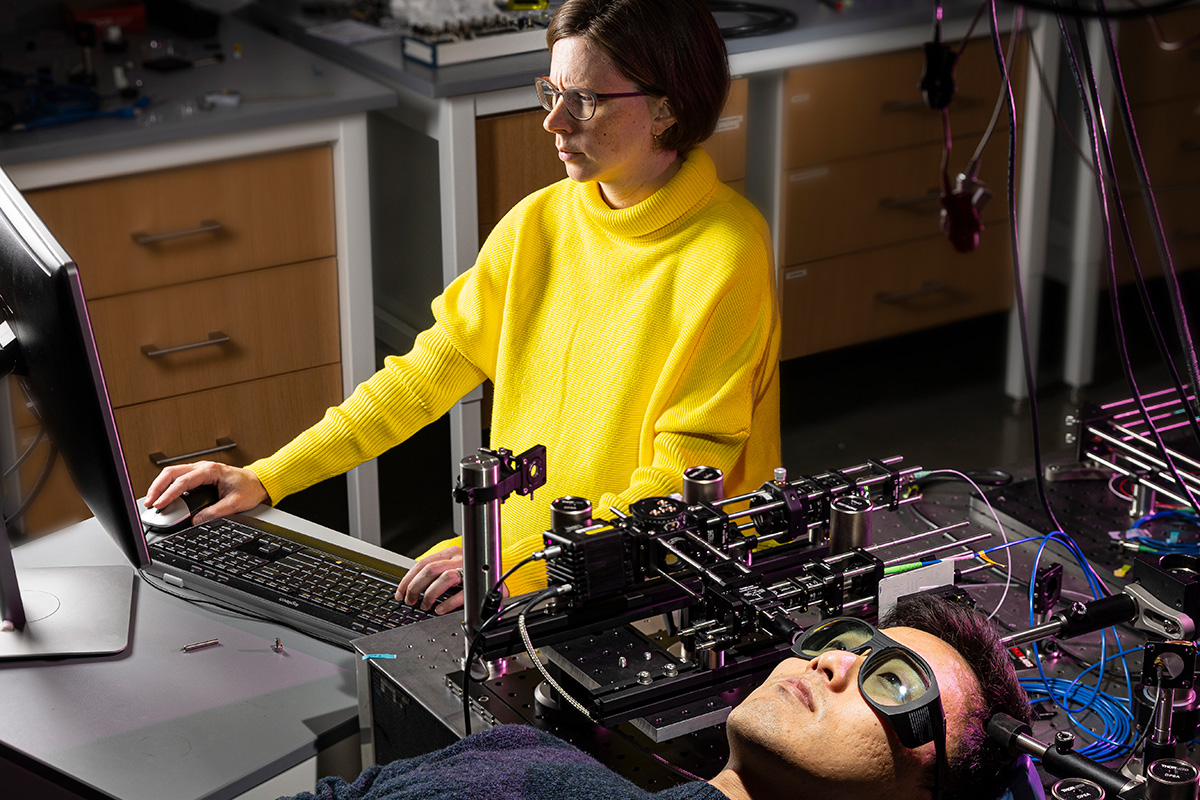

Neuroengineering Lab

The Neuroengineering Lab enables a variety of testing scenarios that explore neural interfaces, psychophysiological assessment, and mixed reality.

Robotics Lab

The Robotics Lab contains cutting-edge robotics tools and hardware for ground and aerial systems, a 300-square-foot motion-capture facility, and a state-of-the-art mobile lab for field experimentation.

Recent News

Johns Hopkins APL and Microsoft’s AI Agent Orchestrates Robotic Teams Dec 2, 2025

Johns Hopkins APL, Microsoft Collaborate to Advance Robotics and Materials Discovery Using AI Apr 2, 2025

From Tool to Teammate: Opening the Aperture on Human-Robot Teaming Aug 22, 2024

Johns Hopkins APL Joins National AI Safety Consortium Mar 8, 2024

Designing Conversational AI to Provide Medical Assistance on the Battlefield Aug 17, 2023